DeepSeek

Just kidding. It’s the Jevons paradox, not Jevon’s paradox. Maybe Jevons effect?

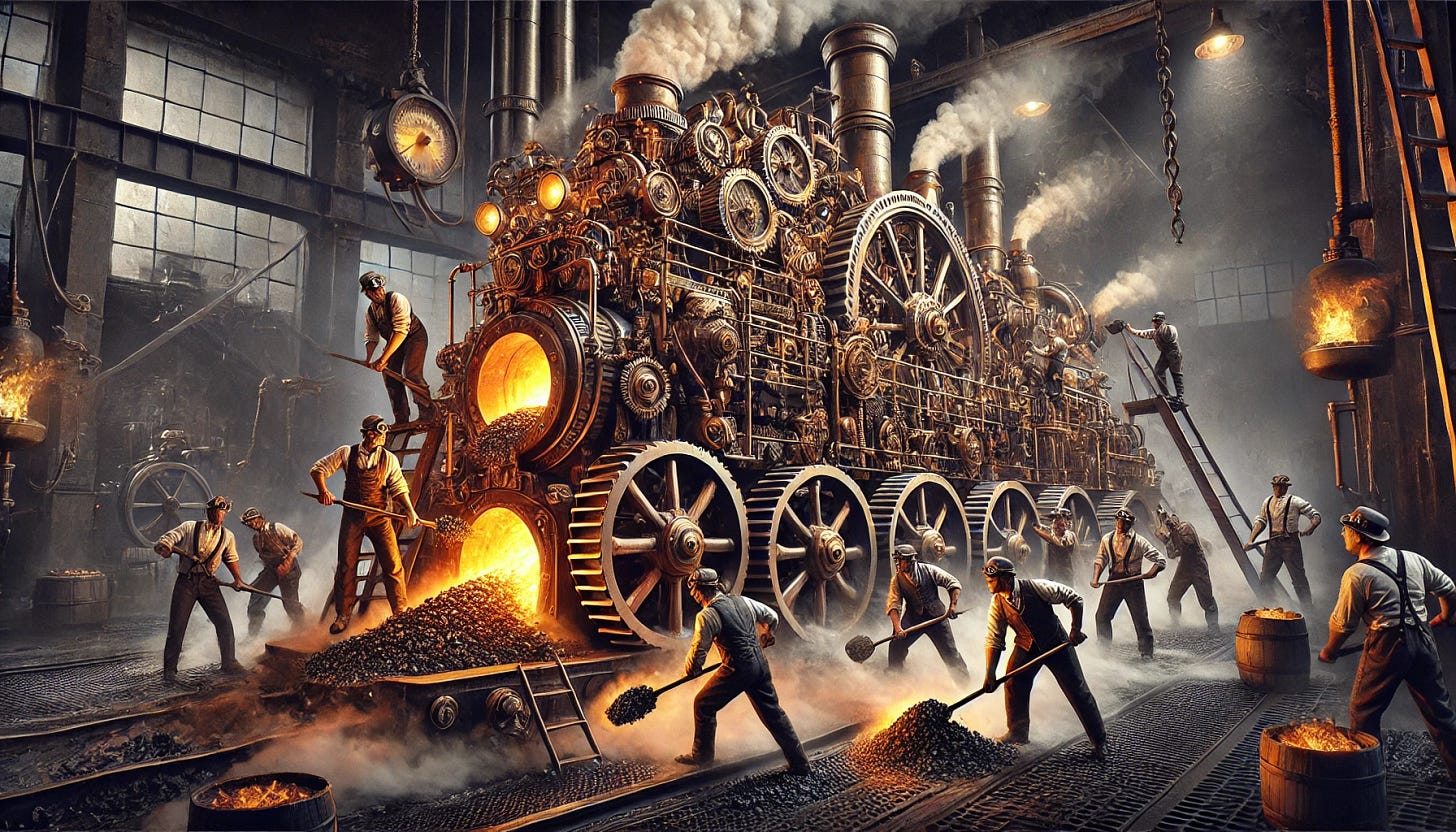

Imagine you live in little old England and the steam engine is invented. But it’s enormous and used to pump water out of stuff. The furnace is comically sized and it takes 10 men shoveling coal into it non-stop to keep it going. The coal industry employs tons of people to meet the demand for coal for these engines, because even though they kind of suck, they are way better than using buckets.

Then someone makes a breakthrough in steam engine tech, and you can pump the same amount of water with one dude shoveling one dude’s worth of coal instead of ten people.

“Oh no!” you might say. “What about all of the people employed in mining coal? We now need 90% less coal, so a bunch of them will be out of work.”

But actually, the fact that your engine is smaller and more efficient means that not only do you use it to pump water in way more places, but other uses that weren’t worth having ten guys shovel coal for are now on the menu. There are 20x as many new steam engines as there used to be old ones, each burning a tenth of the coal, so they are actually using double the coal, and the coal industry has grown tremendously to meet the demand.
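If you want to check the arithmetic, here is a quick sketch in Python. The numbers are just the made-up ones from the story (ten shovelers' worth of coal per old engine, one per new engine, 20x as many engines), and the function name is mine, not anything official.

```python
def total_coal(engines: float, coal_per_engine: float) -> float:
    """Total coal burned: number of engines times coal burned per engine."""
    return engines * coal_per_engine


# Illustrative units only: one old engine burning 10 shovelers' worth of coal.
old = total_coal(engines=1, coal_per_engine=10)

# Each new engine needs a tenth of the coal, but there are 20x as many engines.
new = total_coal(engines=20, coal_per_engine=1)

# Ratio of new total coal demand to old total coal demand.
ratio = new / old
print(ratio)  # 2.0
```

Per-engine coal use drops by 90%, but total demand doubles because the number of engines grows faster than the efficiency gain shrinks each one's appetite.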

You can see how this wouldn’t be the obvious effect. The person who gets credit for noticing this is William Stanley Jevons.

Jevons, who first noticed this with coal and steam engines, is back in the news because of DeepSeek.

Other than being at the top of the app store and being described by some as the catalyst for Nvidia’s market cap declining by $600 billion (B), it is also the hot new LLM.

The nerds like it a lot because it shows you its chain of thought before it feeds you its answer. I do like that. But it is in the financial news because it is from China and because it was allegedly trained for like $5 million (M).

Now, it is important to know that nobody smart thinks the entire company/app/model known as DeepSeek was built for $5 million. Hundreds of millions or billions is probably closer to the right ballpark for the hardware and engineering. Even if one believes the $5 million figure, it is only claimed to be the marginal cost of training part of the model.

But the model is great. It seems to me to be about as good as the other premium models, it is free, and it was trained much more cheaply.

The Death of Moats (are greatly exaggerated)

Nvidia’s stock got hammered when DeepSeek took over the news cycle, on the idea that more efficient use of processing is bad for the company. Jevons bros think this is probably wrong, and that it is hard to see how this success does anything to dampen demand for Nvidia chips. I am in the Jevons bro camp.

But also, a ton of people took to X to say this shows how weak the moats for AI/LLMs are, and I just think they are incredibly wrong. I think the moats are enormous, both in terms of capital and human talent and engineering knowledge required. I’d say the fact that it took this many years for an open source fast-follower to do something like this and make a splash shows how deep the moats are. It would be shocking if one great team of engineers couldn’t do something like this! If we see it happen 5 more times, I’ll change my tune. Also, the strongest moats have never been impenetrable. I remember when MySpace was the world’s moatiest moat, and wow how neat for Tom that he created the first social network and would never be displaced.

MySpace did have a great moat! But Facebook had a cracked founder and excellent execution on an improved product, and a bunch of luck, and now MySpace is gone and Meta is here.

And it is pretty clear that DeepSeek was able to catch up, but really didn’t seem to move the frontier at all (other than the brilliant idea to show chain of thought), which strengthens the moat idea.

Trump is Doing Tariffs (again)

The promised 25% tariffs on Mexico and Canada are officially in, though we will see how many dollars of goods end up being subject to them.

It is rare that so many smart people agree on something, but here, they do. Tariffs are neutral at best and very, very bad at worst as a part of trade policy. The veneer of using them to reduce fentanyl imports seems like a joke that was required to let him use a loophole in the USMCA trade agreement, and this is almost certainly going to cause higher prices for people here in the US. We’ll see how Trump navigates what is likely to be inflationary pressure from this. In his first term he had the lowest interest rates ever and inflation that wouldn’t budge above 2% no matter what monetary and fiscal policy looked like. I hope he has his fingers crossed.

Department of Government Efficiency

DOGE is causing havoc in the civil service. We’ll see how it plays out. They are doing what they said they would do.

Elon is clearly running the Elon playbook, which includes the rule:

“Delete any part or process you can. You may have to add them back later. In fact, if you do not end up adding back at least 10% of them, then you didn’t delete enough.”

As someone who thinks much of the government could in fact be deleted and be net positive even without a superior replacement (FDA delenda est), I am sympathetic to this idea.

It was interesting how many people circled around PEPFAR, which is an HIV/AIDS program that saves so many lives so cheaply that it seemed to trigger the utilitarian instincts of anybody who thinks any humanitarian aid should happen.

Lots of people were using PEPFAR as an example of how wrong DOGE is going already, and while it is certainly possible it will go wrong, this is definitely not something Elon Musk will consider a failure. In fact, he probably sees it as just the opposite. He cut a bunch of stuff, only a few things rose to the top as absolute travesties to stop, and they were reinstated before the stop ever went into effect.

Even if DOGE is a tremendous success, there will be much better opportunities for opponents to point out mistakes than this one.